RallySec ExtraLife

2019-10-01 00:00:00 -0400

On Oct 1st, 2019, I announced that we are organizing a D&D 5E event supporting the ExtraLife charity. Trimarc Security https://www.trimarcsecurity.com/ and Rendition Infosec https://www.renditioninfosec.com/ have chosen to sponsor this event, and the players are donating their time to help organize and create it. This is going to be fun!

How can we help?

If you can help financially, donating directly to the campaign at http://bit.ly/RallySec-ExtraLife will help the kids a lot.

Even if you can’t give money, retweeting or sharing this post gets the word out and raises awareness, which makes a big difference!

What it is

We’re going to be streaming the game live on Twitch at https://twitch.tv/rallysecurity. Viewers and donors can both help the kids and affect the game directly by donating to ExtraLife, and all proceeds from Twitch subscriptions and bits will be given to the RallySec ExtraLife campaign at the end of the night.

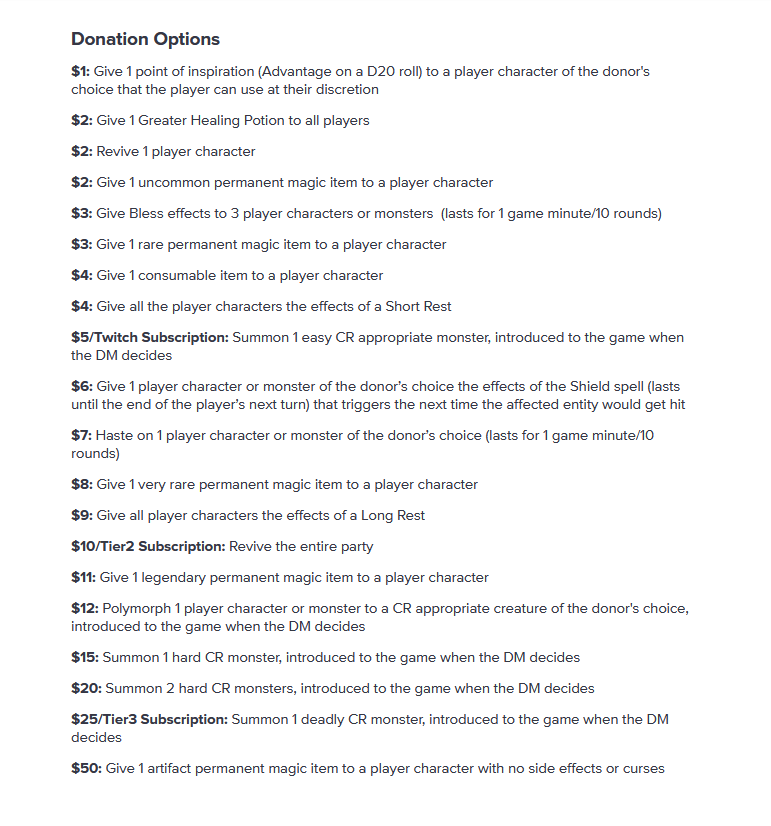

When you donate, you get to pick from a list of priced actions or effects that the donor can have on the game as seen on the following chart:

When is it?

Oct 19th, from 2:30pm to 7:45pm EDT. Our loose schedule of events is below:

- 2:30pm - Start stream

- 2:45pm - Introduction to the stream, what it is, and what it's for. Big thanks all around!

- 3:00pm - Kudos to Trimarc Security https://www.trimarcsecurity.com/ and Rendition Infosec https://www.renditioninfosec.com/ for sponsoring

- 3:05pm - Introduce players and their characters

- 3:10pm - Story begins

- 4:00pm - 10 minute break

- 4:10pm - Story continues

- 5:00pm - 30 minute mid-stream break

- 5:30pm - Story concludes

- 6:20pm - 10 minute break

- 6:30pm - D&D Battle Royale: a free-for-all in the arena, where Twitch chat gets to play a monster

- 7:20pm - Tally total donations

- 7:30pm - Thank donors and sponsors; players tell us where we can follow them on social media!

- 7:45pm - End stream

-Ben

About the Author

Ben Heise (@benheise) is an information security professional who specializes in penetration testing, adversarial (red team) operations, and studying the history, tools, techniques, and procedures of “APTs”. He’s a US Army veteran looking to give back to the community, help others, and make the world a better place. His blog is at https://benjaminheise.com
